Quantum Computing 2026: From Hype to Reality


In January 2026, headlines scream about quantum computers “breaking encryption,” “replacing AI,” and “revolutionising everything.” The reality is far messier—and more interesting. Quantum computing has crossed a threshold from theoretical physics to proven engineering benchmarks, but not in the ways pop culture suggests.

This article cuts through the noise and gives you the straight story. Where is quantum really at right now? What changes in the next five years? And how do you spot when someone’s using quantum as a buzzword versus making a genuine technical claim?

The 2026 Inflection Point: Where We Actually Stand

The story that set the tone for 2025 was Google’s Willow, a 105-qubit superconducting processor unveiled in December 2024 that achieved error reduction at scale. For two decades, quantum computing suffered from a fundamental problem: add more qubits, get more errors. Willow flipped that script. As Google grew its error-correcting code from a 3×3 to a 5×5 to a 7×7 grid of qubits, the logical error rate roughly halved at each step, showing you can scale quantum systems and reduce errors simultaneously. This milestone is known as operating “below the error-correction threshold.”

In early 2026, D-Wave reported a scalable quantum annealing system that outperformed classical approaches on real optimisation problems, marking one of the first commercial-style demonstrations of quantum advantage. Meanwhile, IBM’s fault-tolerant roadmap, published in June 2025, targets 2029 for a system with around 200 logical qubits capable of 100 million error-corrected operations, with ambitions for 1,000 logical qubits in the early 2030s and far beyond after that.

What does this mean? Quantum is transitioning from “theoretically cool” to “practically useful in narrow domains.” In 2025, IonQ and Ansys ran a medical device simulation on a 36-qubit quantum computer that outperformed classical supercomputers by about 12%, a real-world quantum advantage rather than a lab-only toy benchmark. Google’s Willow, for its part, completed a random circuit sampling benchmark in under five minutes that would take a classical supercomputer an absurd 10²⁵ years (ten septillion years) on that specific task.

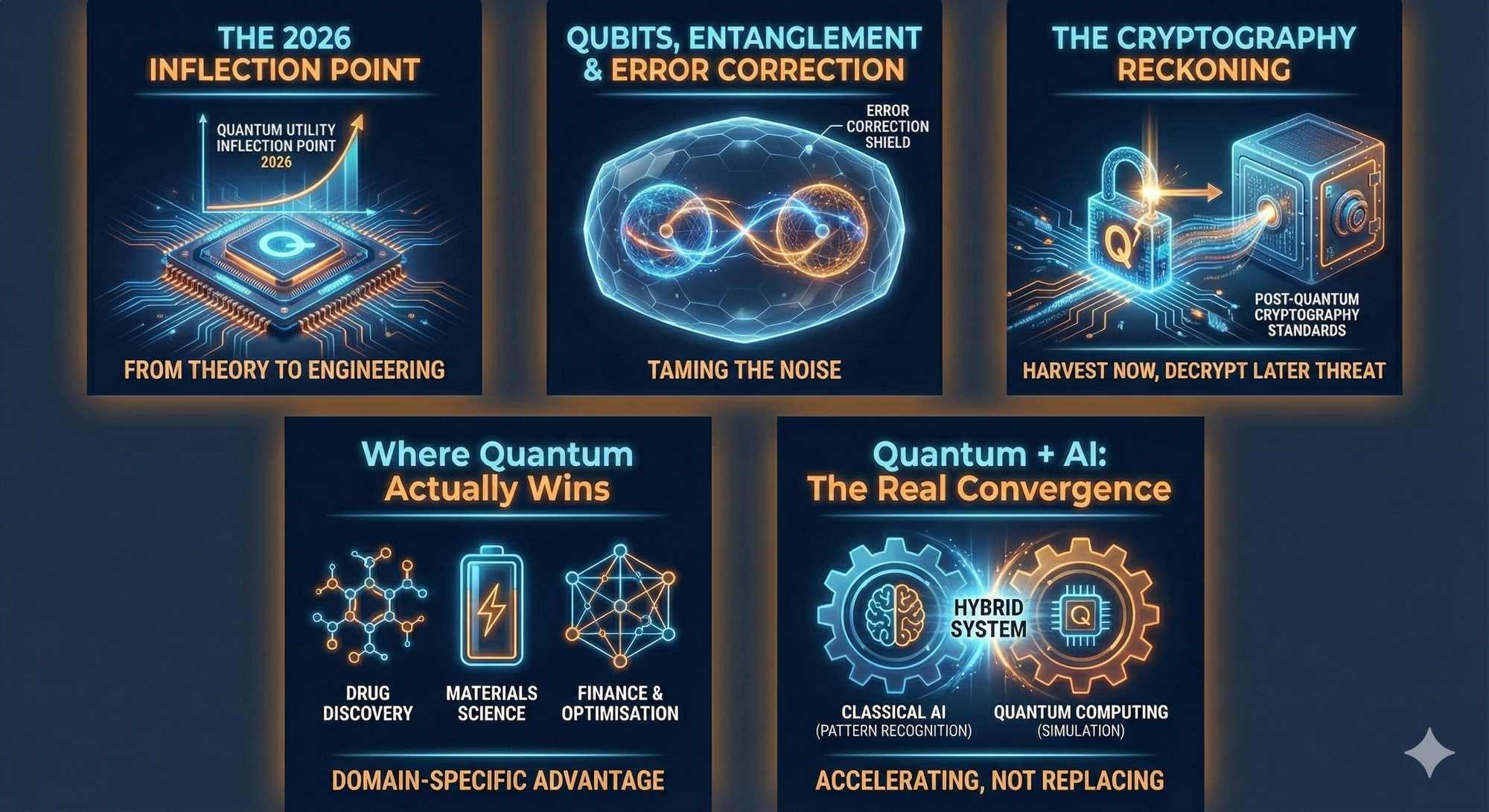

Qubits, Entanglement & the Error Correction Bottleneck

To understand what is actually happening in quantum computing, it helps to see why quantum machines are different from classical ones—and why they are so hard to build at scale.

Classical Bits vs Qubits

Every classical computer—from your phone to a data‑centre server—thinks in bits: switches that are either 0 or 1. All software, video, and AI models boil down to huge patterns of zeros and ones processed very quickly.

A qubit (quantum bit) is different. Thanks to superposition, a qubit can be 0, 1, or a blend of both at the same time until it is measured. A useful picture is a spinning coin: while it spins, it is effectively both heads and tails; only when you catch it and look does it become one or the other.
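To make the coin picture concrete, here is a minimal sketch in plain Python (no quantum SDK assumed) that stores a qubit as two complex amplitudes, applies a Hadamard gate to create an equal superposition, and samples measurements. The helper names are illustrative; the rule that each outcome’s probability is the squared magnitude of its amplitude (the Born rule) is standard quantum mechanics.

```python
import math
import random

# A qubit's state is a pair of complex amplitudes (alpha, beta) for |0> and |1>,
# normalised so that |alpha|^2 + |beta|^2 == 1.

def hadamard(state):
    """Apply the Hadamard gate, which turns |0> into an equal superposition."""
    alpha, beta = state
    s = 1 / math.sqrt(2)
    return (s * (alpha + beta), s * (alpha - beta))

def probabilities(state):
    """Born rule: each outcome's probability is its amplitude's squared magnitude."""
    alpha, beta = state
    return abs(alpha) ** 2, abs(beta) ** 2

def measure(state, rng=random):
    """Sample one classical bit (a real measurement would also collapse the state)."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1

zero = (1 + 0j, 0 + 0j)          # the |0> state: a coin lying heads-up
spinning = hadamard(zero)        # the "spinning coin": equal superposition
print(probabilities(spinning))   # approximately (0.5, 0.5)

rng = random.Random(42)
counts = [0, 0]
for _ in range(1000):
    counts[measure(spinning, rng)] += 1
print(counts)                    # roughly 500 of each outcome
```

Applying `hadamard` a second time returns the qubit to |0⟩ (up to rounding), because the two contributions to |1⟩ cancel. That cancellation, called interference, is something a literally spinning coin cannot do.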

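This is where quantum parallelism appears. One qubit is described by a superposition of two basis states; two qubits, four; three, eight; n qubits, 2ⁿ. By the time you reach a few hundred high-quality qubits, the number of states a quantum computer can explore in parallel becomes astronomically large: 300 qubits span roughly 2 × 10⁹⁰ basis states, more than the estimated number of atoms in the observable universe. The catch is that measuring the machine returns just one outcome, so useful quantum algorithms rely on interference to boost the probability of correct answers and cancel out wrong ones, rather than simply reading out every possibility at once.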
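The exponential growth is easy to check with arithmetic. This sketch assumes a brute-force classical simulator that stores every amplitude as a double-precision complex number (16 bytes); smarter simulation methods exist for some circuits, so treat the figures as an illustration of the worst case rather than a hard limit.

```python
# Classical cost of storing a full n-qubit state vector: 2**n complex amplitudes.
BYTES_PER_AMPLITUDE = 16  # assumption: complex128, i.e. two 8-byte floats

def amplitudes(n_qubits: int) -> int:
    """Number of complex amplitudes needed to describe n qubits exactly."""
    return 2 ** n_qubits

def state_vector_bytes(n_qubits: int) -> int:
    """Memory a brute-force classical simulator would need for the full state."""
    return amplitudes(n_qubits) * BYTES_PER_AMPLITUDE

for n in (1, 2, 3, 10, 30, 50):
    gib = state_vector_bytes(n) / 2**30
    print(f"{n:>3} qubits: {amplitudes(n):,} amplitudes, {gib:.3g} GiB")
```

Thirty qubits already need 16 GiB; fifty need about 16 PiB (16 × 2⁵⁰ bytes), which is why classical machines struggle to simulate even modest quantum chips exactly.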

Entanglement: The Weird But Useful Part

Entanglement is the quantum property that links qubits together so that the state of one depends on the state of another, even if they are far apart. This “spooky action at a distance” lets quantum algorithms coordinate many qubits to explore and prune vast solution spaces in ways classical machines simply cannot.
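Entanglement can be shown with a four-amplitude state vector. This sketch, again in plain Python with illustrative helper names, applies a Hadamard gate to the first qubit and then a CNOT gate, the textbook recipe for a Bell state. The measurement probabilities show the link: the two qubits always agree, landing on 00 or 11 with equal odds and never on 01 or 10.

```python
import math

# Two-qubit state: amplitudes for |00>, |01>, |10>, |11> (index = 2*q0 + q1).

def h_on_q0(state):
    """Hadamard on the first qubit: mixes each pair |0 q1> and |1 q1>."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]

def cnot_q0_to_q1(state):
    """CNOT: flip the second qubit when the first is 1, i.e. swap |10> and |11>."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]

def outcome_probabilities(state):
    """Probability of each two-bit measurement outcome."""
    labels = ["00", "01", "10", "11"]
    return {label: abs(amp) ** 2 for label, amp in zip(labels, state)}

start = [1, 0, 0, 0]                      # both qubits in |0>
bell = cnot_q0_to_q1(h_on_q0(start))      # (|00> + |11>) / sqrt(2)
print(outcome_probabilities(bell))
```

Each qubit on its own still looks like a fair coin; the useful structure lives entirely in the correlation between them.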

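That capability underpins the promise of large speed-ups for certain tasks. For some molecular simulation problems, the hoped-for speed-up is exponential. For other tasks it is more modest: Grover’s algorithm, for example, searches an unstructured list of N items in roughly √N steps instead of the N/2 a classical search needs on average, a quadratic rather than exponential gain. For problems such as optimising complex portfolios, how large any advantage will be is still an open research question.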
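The quadratic speed-up of Grover’s algorithm can be seen by simulating its amplitudes directly. This sketch assumes an idealised, noise-free machine and models the oracle and the “diffusion” step as simple operations on a list of real amplitudes instead of building gates; the iteration count of about (π/4)√N is the standard result for a single marked item.

```python
import math

def grover_success_probability(n_qubits: int, marked: int, iterations: int) -> float:
    """Simulate Grover's search on a full state vector and return P(marked item)."""
    size = 2 ** n_qubits
    amps = [1 / math.sqrt(size)] * size        # uniform superposition over all items
    for _ in range(iterations):
        amps[marked] = -amps[marked]           # oracle: flip the sign of the answer
        mean = sum(amps) / size
        amps = [2 * mean - a for a in amps]    # diffusion: reflect every amplitude about the mean
    return amps[marked] ** 2

def optimal_iterations(size: int) -> int:
    """Roughly (pi/4) * sqrt(N) iterations maximise the success probability."""
    return math.floor(math.pi / 4 * math.sqrt(size))

n = 10                                         # 1,024 items
k = optimal_iterations(2 ** n)
print(k, grover_success_probability(n, marked=123, iterations=k))
```

For 1,024 items, 25 Grover iterations find the marked entry with probability above 99%, whereas a classical search checks 512 entries on average. Quadrupling the list size only doubles the number of iterations needed.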

The Real Problem: Noise and Error Correction

The catch is that qubits are extremely fragile. Leading superconducting devices, including Google’s and IBM’s, operate at temperatures close to absolute zero, and small vibrations, stray electromagnetic fields, or random photons can knock qubits out of their quantum state, a process called decoherence.

Because of this, practical quantum computing hinges on error correction. A single reliable “logical” qubit often needs hundreds or thousands of “physical” qubits working together to detect and correct errors fast enough. Google’s Willow and other recent work indicate that scaling while reducing logical error rates is now possible, which is the big engineering milestone everyone was waiting for.
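A classical toy model shows why “more qubits, fewer errors” hinges on hardware quality. This sketch uses a repetition code: store one bit as several copies and take a majority vote. It assumes independent bit-flip errors with probability p per copy; real quantum codes such as the surface code used on Willow must also catch phase errors and have a much lower threshold (often quoted at around 1%), so the numbers are illustrative only.

```python
from math import comb

def logical_error_rate(p: float, distance: int) -> float:
    """Probability that majority voting over `distance` copies decodes wrongly,
    assuming each copy flips independently with probability p."""
    failures_needed = distance // 2 + 1        # a majority of copies must flip
    return sum(
        comb(distance, k) * p**k * (1 - p) ** (distance - k)
        for k in range(failures_needed, distance + 1)
    )

for p in (0.01, 0.6):
    rates = [logical_error_rate(p, d) for d in (1, 3, 5, 7)]
    print(p, [f"{r:.2e}" for r in rates])
```

With p = 0.01, each step up in code size cuts the logical error rate by more than an order of magnitude; with p = 0.6, adding copies makes things worse. That flip from “bigger is worse” to “bigger is better” is the threshold behaviour recent hardware is now demonstrating.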

NISQ vs Fault‑Tolerant: Rough Timeline

- 2024–2026: NISQ era. 50–1,000 noisy qubits. Useful for carefully chosen chemistry and optimisation problems; error rates still high.

- 2027–2029: Transition. First systems with dozens to hundreds of logical qubits delivering consistent advantage in narrow domains (chemistry, materials, finance).

- 2030 onward: Scaled fault‑tolerant systems with thousands of logical qubits. Practical impact on cryptography, large‑scale optimisation and some classes of AI workflows.

The Cryptography Reckoning: Harvest Now, Decrypt Later

The loudest headlines are about quantum “breaking the internet.” That is an exaggeration, but there is a real, technical problem behind it.

How Quantum Threatens Today’s Encryption

Much of today’s secure communication relies on public‑key schemes such as RSA and elliptic‑curve cryptography. Their security depends on the fact that factoring large numbers or solving discrete‑logarithm problems is brutally hard for classical computers.
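A toy example shows why factoring is the crux. This sketch uses the classic textbook primes 61 and 53, far too small to be secure; real RSA moduli are 2,048 bits or more. Anyone who can factor the public modulus n can recompute the private exponent, which is exactly the step Shor’s algorithm would make feasible for real key sizes.

```python
# Textbook-sized RSA, for illustration only (never use tiny keys in practice).
p, q = 61, 53
n = p * q                    # public modulus: 3233
phi = (p - 1) * (q - 1)      # Euler's totient: 3120, kept secret
e = 17                       # public exponent
d = pow(e, -1, phi)          # private exponent: modular inverse of e (Python 3.8+)

message = 65
ciphertext = pow(message, e, n)           # anyone can encrypt with (n, e)
assert pow(ciphertext, d, n) == message   # only the holder of d can decrypt

def crack_by_factoring(n: int, e: int) -> int:
    """An attacker who factors n recovers d. Trial division works here only
    because n is tiny; for a 2,048-bit n it is hopeless on classical hardware."""
    for candidate in range(2, int(n ** 0.5) + 1):
        if n % candidate == 0:
            p_found, q_found = candidate, n // candidate
            return pow(e, -1, (p_found - 1) * (q_found - 1))
    raise ValueError("n has no small factor")

stolen_d = crack_by_factoring(n, e)
print(ciphertext, stolen_d, pow(ciphertext, stolen_d, n))
```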

A large, fault-tolerant quantum computer running Shor’s algorithm could solve these problems in polynomial time, super-polynomially faster than the best known classical methods, making it theoretically possible to decrypt data protected by widely used keys like RSA-2048.
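Shor’s algorithm is mostly classical number theory wrapped around one quantum step: finding the period r of aᵏ mod n. This sketch runs the classical reduction and substitutes a brute-force loop for the quantum period-finding step, so it only works for tiny numbers; the point is to show what the quantum computer would actually be asked to do.

```python
from math import gcd

def find_period_classically(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1 (requires gcd(a, n) == 1).
    This brute-force loop is the step Shor's algorithm replaces with quantum
    period finding; its running time grows exponentially with the digits of n."""
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def shor_style_factor(n: int, a: int):
    """Classical post-processing from Shor's algorithm: turn a period into factors."""
    g = gcd(a, n)
    if g != 1:
        return g, n // g                     # lucky guess: a already shares a factor
    r = find_period_classically(a, n)
    if r % 2 == 1 or pow(a, r // 2, n) == n - 1:
        return None                          # unlucky choice of a; retry with another
    half = pow(a, r // 2, n)
    return gcd(half - 1, n), gcd(half + 1, n)

print(shor_style_factor(15, 7))
```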

The real‑world risk is a strategy called “Harvest Now, Decrypt Later”: attackers record encrypted traffic today with the goal of decrypting it in the future once quantum hardware is powerful enough.

Post‑Quantum Cryptography: Already Rolling Out

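To counter this, cryptographers have developed post-quantum cryptography (PQC): algorithms designed to resist both classical and quantum attacks. NIST selected its first PQC algorithms in 2022 and in August 2024 published them as standards: FIPS 203 (ML-KEM, for key establishment), plus FIPS 204 (ML-DSA) and FIPS 205 (SLH-DSA) for digital signatures. Governments are now mandating migration.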

The EU has published a roadmap requiring member states to start transitioning critical systems by the mid‑2020s, and similar guidance is emerging in the UK and US. Security vendors and cloud providers are adding PQC options to VPNs, TLS, key‑management systems, and hardware security modules.

Crypto Timeline to 2032

- 2026: High‑risk organisations begin serious cryptographic audits and pilot PQC deployments.

- 2027–2028: Mainstream support for hybrid classical + post‑quantum TLS and VPN in browsers, operating systems, and cloud platforms.

- 2029–2031: Conservative horizon when large‑scale quantum attacks on RSA‑2048 become plausible, depending on hardware progress.

- 2032+: Any organisation still relying on pre‑PQC schemes for long‑lived secrets faces measurable risk from harvested data.
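The hybrid classical + post-quantum approach in the timeline above is designed so that the session key stays safe as long as either key exchange holds. This sketch shows only the combining step: it concatenates two shared secrets and feeds them through HKDF (RFC 5869), implemented with Python’s standard `hmac` and `hashlib` modules. The two secrets are random placeholders standing in for, say, an X25519 and an ML-KEM result; a real deployment would obtain them from a vetted cryptographic library and use its protocol’s own key schedule, and the `info` label here is hypothetical.

```python
import hashlib
import hmac
import os

def hkdf_sha256(ikm: bytes, info: bytes, length: int = 32, salt: bytes = b"") -> bytes:
    """HKDF from RFC 5869 with SHA-256: extract a pseudorandom key, then expand it."""
    if length > 255 * 32:
        raise ValueError("HKDF-SHA256 can output at most 8160 bytes")
    prk = hmac.new(salt or bytes(32), ikm, hashlib.sha256).digest()   # extract step
    okm, block, counter = b"", b"", 1
    while len(okm) < length:                                          # expand step
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]

def combine_hybrid_secrets(classical_secret: bytes, pq_secret: bytes) -> bytes:
    """Derive one session key from both secrets; an attacker must learn
    both inputs to recompute it."""
    return hkdf_sha256(classical_secret + pq_secret, info=b"hypothetical hybrid demo v1")

# Placeholders only: in practice these come from real X25519 and ML-KEM exchanges.
classical_secret = os.urandom(32)
pq_secret = os.urandom(32)
session_key = combine_hybrid_secrets(classical_secret, pq_secret)
print(session_key.hex())
```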

Most people do not need to panic: your streaming password or disposable login will not matter in 10 years. But organisations holding sensitive, long‑lived data should be acting now.
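A widely used rule of thumb for “should we act now?” is Mosca’s inequality: if x is how many years your data must stay secret, y is how long your migration to PQC will take, and z is how many years until a cryptographically relevant quantum computer exists, you have a problem whenever x + y > z. This sketch simply encodes that comparison; every input is an estimate you supply, and the example figures are hypothetical.

```python
def mosca_at_risk(shelf_life_years: float, migration_years: float, years_to_quantum: float) -> bool:
    """Mosca's inequality: harvested data is at risk when x + y > z."""
    return shelf_life_years + migration_years > years_to_quantum

def slack_years(shelf_life_years: float, migration_years: float, years_to_quantum: float) -> float:
    """Positive: margin remaining. Negative: roughly how many years some data
    would still need protection after quantum attacks become possible."""
    return years_to_quantum - (shelf_life_years + migration_years)

# Hypothetical figures, assuming a cryptographically relevant quantum computer in 10 years.
scenarios = {
    "health records": (25, 5, 10),
    "streaming password": (0.1, 1, 10),
}
for name, (x, y, z) in scenarios.items():
    print(name, mosca_at_risk(x, y, z), slack_years(x, y, z))
```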

Where Quantum Actually Wins: Real Applications

Forget the idea of a “quantum laptop.” Quantum computing will live in data centres and labs, and its early wins are in domains where nature itself is quantum‑mechanical or the search space is absurdly large.

Drug Discovery and Molecular Simulation

Molecules are quantum systems. Classical computers approximate them and quickly hit limits. Quantum machines, in principle, can simulate them more naturally.

In 2025, collaborations between quantum vendors and pharma companies demonstrated early quantum advantage on molecular simulations relevant to drug discovery, hinting at shorter discovery cycles and more accurate predictions of how potential drugs behave.

Battery and Materials Science

Designing better batteries, superconductors and photovoltaic materials is another quantum‑heavy problem. Quantum computers can help explore candidate materials and configurations, potentially leading to higher‑density batteries or more efficient solar cells faster than brute‑force classical search.

Finance, Risk & Optimisation

Financial institutions rely on Monte‑Carlo simulations and complex optimisation to price derivatives and manage risk. Quantum algorithms promise speed‑ups for some of these workloads, especially when combined with clever classical pre‑ and post‑processing.
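The speed-up usually cited here is quadratic. Classical Monte Carlo error shrinks like 1/√N with N samples, so halving the error costs four times the samples; quantum amplitude estimation, on an idealised fault-tolerant machine, can in principle reach error ε with on the order of 1/ε queries instead of 1/ε². This sketch runs a toy classical estimate (a hypothetical payoff of 1 whenever a standard-normal return exceeds 1.0) and compares the two scaling laws, ignoring constant factors and hardware overheads, which matter a great deal in practice.

```python
import math
import random

def mc_estimate(samples: int, rng: random.Random) -> tuple[float, float]:
    """Classical Monte Carlo: estimate P(return > 1.0) for a standard-normal
    return, plus its standard error, which shrinks like 1/sqrt(samples)."""
    hits = sum(1 for _ in range(samples) if rng.gauss(0.0, 1.0) > 1.0)
    p_hat = hits / samples
    return p_hat, math.sqrt(p_hat * (1 - p_hat) / samples)

def classical_samples_needed(sigma: float, eps: float) -> int:
    """Samples needed for standard error eps: (sigma / eps) ** 2."""
    return math.ceil((sigma / eps) ** 2)

def amplitude_estimation_queries(sigma: float, eps: float) -> int:
    """Idealised quantum amplitude estimation: about sigma / eps queries
    (constant factors deliberately ignored)."""
    return math.ceil(sigma / eps)

exact = 0.5 * math.erfc(1 / math.sqrt(2))      # P(Z > 1) for a standard normal
estimate, std_err = mc_estimate(100_000, random.Random(7))
print(f"estimate {estimate:.4f} ± {std_err:.4f} (exact {exact:.4f})")
for eps in (1e-2, 1e-3, 1e-4):
    print(eps, classical_samples_needed(1.0, eps), amplitude_estimation_queries(1.0, eps))
```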

Logistics and Complex Scheduling

Routing thousands of vehicles, scheduling flights, or optimising factory layouts are all classic combinatorial problems. Quantum annealers and gate‑based machines are being tested on such tasks, sometimes outperforming classical heuristics on specific large instances.
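Quantum annealers such as D-Wave’s take problems written as a QUBO (quadratic unconstrained binary optimisation): choose 0/1 values for variables to minimise an energy built from pairwise terms. Real routing and scheduling formulations are much larger and messier, so this sketch uses Max-Cut (split the nodes of a graph into two groups so that as many edges as possible cross between them) on a four-node ring as a hypothetical stand-in. A brute-force search plays the role the annealer would play heuristically on instances too big to enumerate.

```python
from itertools import product

def maxcut_qubo_energy(bits, edges):
    """QUBO energy whose minimum is the maximum cut: each edge contributes -1
    when its endpoints land on different sides (x_i + x_j - 2*x_i*x_j == 1)."""
    return -sum(bits[i] + bits[j] - 2 * bits[i] * bits[j] for i, j in edges)

def brute_force_minimum(n, edges):
    """Exhaustive search over all 2**n assignments: the job an annealer attempts
    heuristically for problems far too large to enumerate."""
    return min(product((0, 1), repeat=n), key=lambda bits: maxcut_qubo_energy(bits, edges))

square = [(0, 1), (1, 2), (2, 3), (3, 0)]      # four nodes joined in a ring
best = brute_force_minimum(4, square)
print(best, maxcut_qubo_energy(best, square))
```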

Quantum + AI: The Real Convergence

AI and quantum are often pitched as rivals, but in practice they are complementary: AI is about patterns in data; quantum is about specialised computation.

AI Today Is Entirely Classical

Current large language models, image generators and recommendation systems run on classical hardware—GPUs and specialised accelerators in data centres. None of the AI tools people use daily rely on quantum machines.

Where Quantum Could Help AI

Researchers are exploring quantum machine learning—using quantum circuits to speed up or improve parts of ML workflows. Potential advantages include:

- Faster optimisation of certain model parameters for specialised problems.

- Better handling of very high‑dimensional data in niche cases.

- Combining quantum‑accurate simulations (for example of molecules) with classical ML models trained on the resulting data.

The likely future is hybrid systems: quantum processors act as accelerators for specific sub‑problems, while classical AI does the rest.
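A common way to wire up such a hybrid system is a variational loop: a quantum processor evaluates a parameterised circuit, and a classical optimiser adjusts the parameters. This sketch fakes the quantum step with the exact expectation value ⟨Z⟩ = cos θ for a single qubit rotated by Ry(θ) from |0⟩, and uses the parameter-shift rule to get gradients from two extra circuit evaluations; on real hardware each evaluation would be a noisy estimate built from many repeated shots.

```python
import math

def quantum_expectation(theta: float) -> float:
    """Stand-in for the quantum step: prepare Ry(theta)|0> and return <Z>.
    Real hardware would estimate this from repeated measurements; here we
    use the exact value, cos(theta)."""
    return math.cos(theta)

def parameter_shift_gradient(theta: float) -> float:
    """Exact gradient from two shifted circuit evaluations (parameter-shift rule)."""
    shift = math.pi / 2
    return (quantum_expectation(theta + shift) - quantum_expectation(theta - shift)) / 2

def hybrid_minimise(theta: float = 0.1, learning_rate: float = 0.4, steps: int = 100) -> float:
    """Classical gradient descent driving the (simulated) quantum evaluations."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta

theta = hybrid_minimise()
print(theta, quantum_expectation(theta))   # theta approaches pi, <Z> approaches -1
```

The optimiser drives θ toward π, where the qubit sits in |1⟩ and ⟨Z⟩ reaches its minimum of −1. Starting exactly at θ = 0 would stall, since the gradient there is zero, which is why the sketch starts at 0.1.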

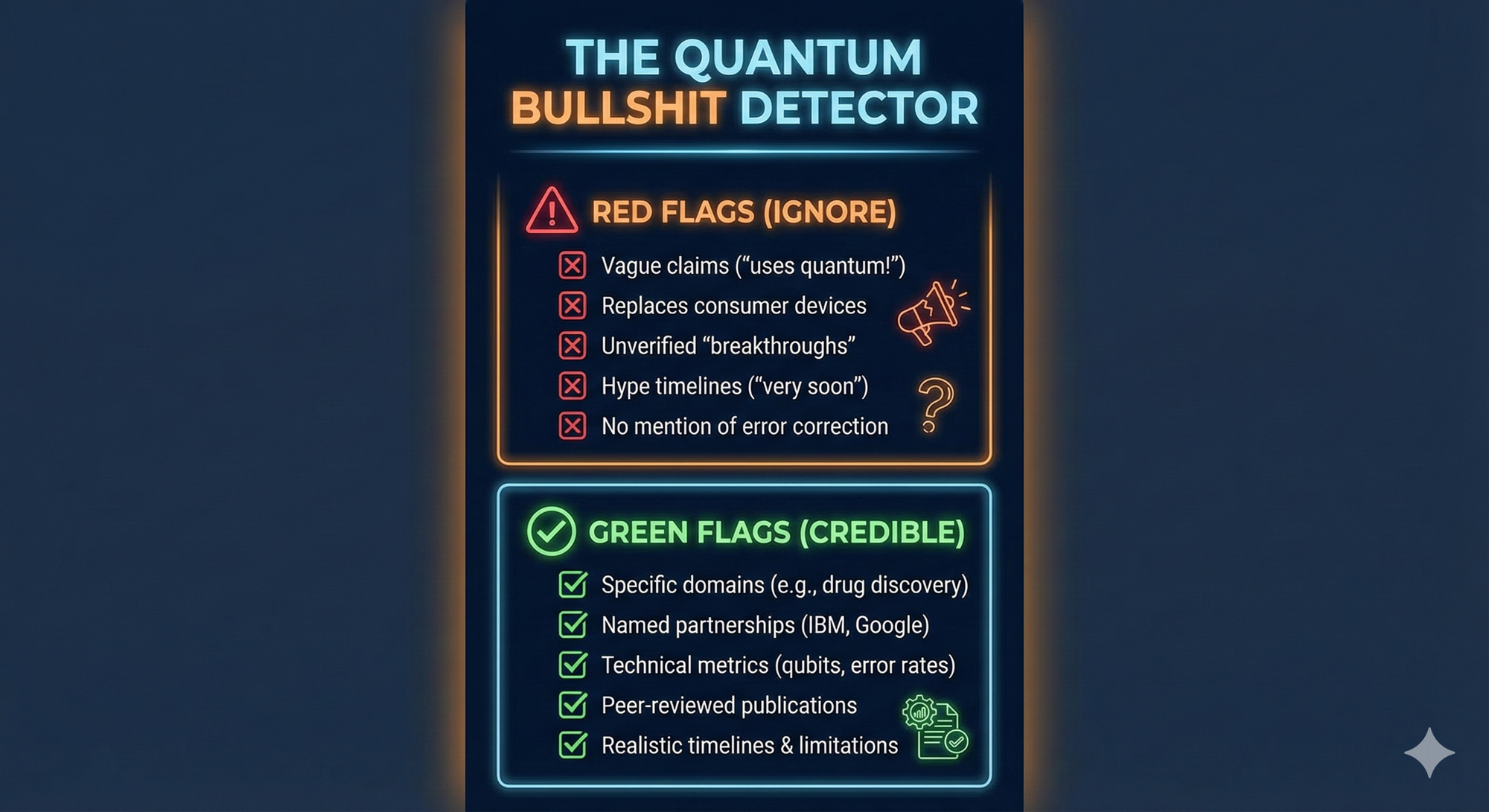

The Bullshit Detector: Spotting Real Quantum Progress

With so much hype, it helps to have a quick mental checklist for evaluating any quantum headline or press release.

🚩 Red Flags – Treat With Caution

- Vague claims like “uses quantum technology” with no explanation of what problem quantum actually solves.

- Promises that quantum will replace consumer devices like phones or laptops.

- “Breakthroughs” announced only via marketing slides, with no paper, benchmark or independent verification.

- Hype timelines such as “very soon” or “within a year” for large‑scale cryptography‑breaking systems.

- No mention of error correction, coherence times, or noise—core challenges in every serious quantum roadmap.

✅ Green Flags – More Likely to Be Credible

- Specific domains mentioned (for example drug discovery, materials science, portfolio optimisation).

- Named partnerships with established players (IBM, Google, major pharma or finance firms).

- Concrete metrics: qubit counts, error rates, coherence times, and benchmark descriptions.

- Peer‑reviewed publications or detailed technical reports alongside press releases.

- Realistic timelines that acknowledge uncertainty and the scale of the engineering challenge.

Reading Headlines Like a Pro

When you see “quantum computer outperforms supercomputer,” look for the fine print: which task, under what assumptions, and is it a contrived benchmark or a real business problem?

Serious progress usually looks boring: incremental improvements in error rates, more stable qubits, slightly larger useful circuit depths. Those are the changes that unlock everything else.

What Changes Between 2026 and 2030

For Security and Infrastructure Teams

- 2026–2027: cryptographic inventories and pilots of post‑quantum schemes become standard for banks, governments and critical infrastructure.

- 2027–2029: major cloud platforms offer PQC‑by‑default options for data at rest and in transit.

- By 2030: regulators increasingly expect quantum‑resistant encryption for long‑lived sensitive data.

For Developers and Technologists

- Quantum‑as‑a‑service platforms—IBM Quantum, Google Quantum AI, Amazon Braket—continue expanding free and low‑cost access for experimentation.

- Frameworks like Qiskit, Cirq and others become more integrated with mainstream tooling, making hybrid quantum‑classical workflows easier to prototype.

- In certain sectors (chemistry, materials, finance), knowing the basics of quantum algorithms becomes a career advantage.

For the Rest of the World

- Better batteries and materials reach products gradually as quantum‑accelerated research feeds into traditional engineering pipelines.

- Drug discovery may accelerate for some classes of disease, though clinical testing still takes years.

- Behind the scenes, more of the encryption protecting long‑term data quietly becomes quantum‑safe.

The Boring Truth Is the Interesting Part

Quantum computing is not a magic box that will replace classical computers or instantly break the internet. It is a demanding engineering project that is starting to pay off in specific, high‑value niches: simulating molecules, optimising complex systems, and nudging cryptography into its next phase.

2026 marks the moment quantum moves from “maybe someday” to “quietly useful in the right places.” Understanding what it actually does—and what it does not do—lets you ignore the hype and focus on the real opportunities.